Few financial institutions (FIs) have yet implemented Generative Artificial Intelligence (GenAI) technology in their operations. However, both large and small FIs are actively experimenting with GenAI, seeking the most impactful and safest use cases to pilot. The introduction of new technology into production always carries inherent risk, yet the risks associated with launching GenAI are novel, untested, unpredictable, and poorly understood. Nevertheless, within the dynamic landscape of finance and technology, arguably the most significant risk is standing still.

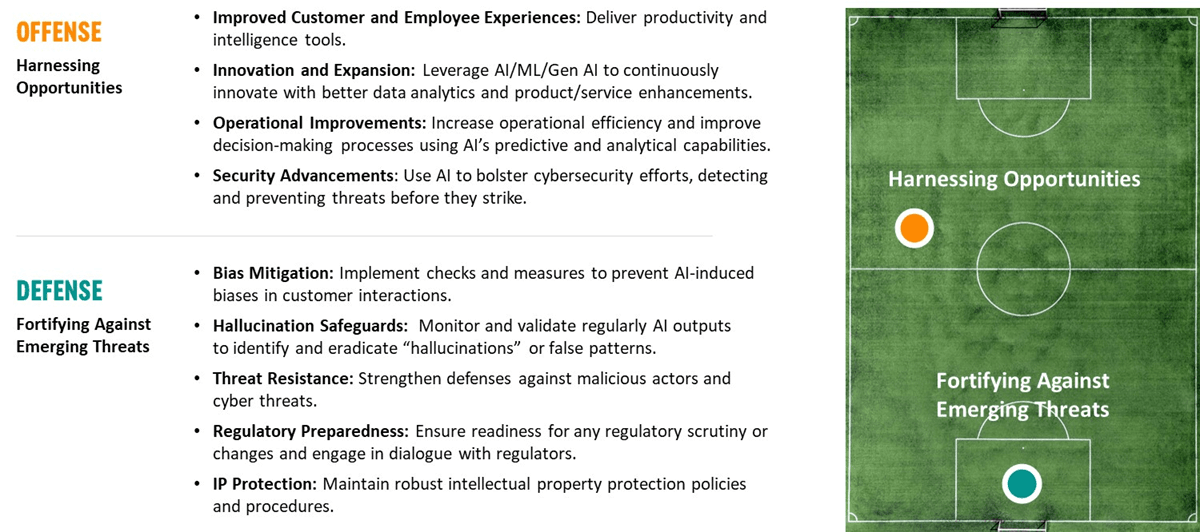

This report underscores the need for FIs to balance these risks against the substantial rewards that innovation in GenAI can bring. It emphasizes the importance of FIs embracing their roles as agents of industry progress rather than passive observers. As FIs integrate GenAI into their operations, two types of risk become evident. The first encompasses risks linked with deploying GenAI internally, including model bias, hallucinations, false output, and data privacy concerns. The second covers external risks stemming from GenAI, including regulatory violations, intellectual property infringement, and empowered bad actors.

The report examines the many risks of GenAI in detail and provides strategies to mitigate them.