In Search of Shangri-La: The Costly Pursuit of Data Management in Banking

Data management practices first came to the fore in the aftermath of the 2008 financial crisis. In 2013 the Basel Committee on Banking Supervision introduced BCBS 239, which codified the principles for risk data aggregation and risk reporting under Basel III standards. Major banks scrambled to address stringent “matters requiring attention” (MRAs) from regulators, who demanded stronger controls and better transparency into how credit, capital, and liquidity reports were constructed. The burden on the banks was to show where data elements were created, how that data flowed through a supply chain of applications and processes, where it was merged, split, or transformed, where and how it was used in analytics, and how it subsequently made its way into regulatory reports.

However, even after a decade, managing data well can feel almost as elusive as finding the mystical valley of Shangri-La. Citi’s travails this summer, following a Federal Reserve exam in 2023, underscore that the uphill battle continues. Senior leadership changes have occurred following the announcement that the bank was fined by both the Federal Reserve and the OCC for making “insufficient progress” toward fixing data management issues that date back to 2020. The fines came to a costly $136mn in total. Of course, this is just the most recent industry news; many of the largest banks also struggle to manage data assets to the extremely high expectations of the regulators. The old adage “people in glass houses shouldn’t throw stones” comes to mind. On data quality, that applies to other banks (and to financial services analyst firms!), and especially to other industries. Few other industries have their data quality inspected to the same level by federal departments and agencies!

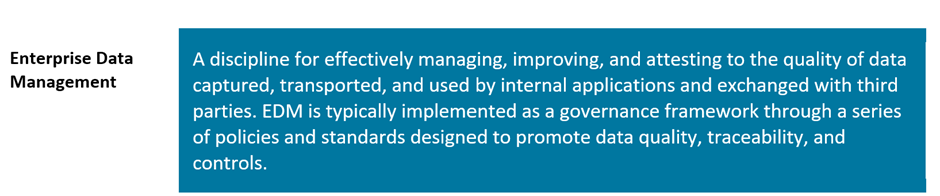

Getting data management right is hard, and it requires priority and commitment at the highest levels of an organization. Despite the challenges at Citi, the financial services industry is generally considered the most mature in terms of data management and governance, according to the Enterprise Data Management Council’s biannual cross-industry benchmark survey[1], at least as far as data used in regulatory reporting goes. It is fair to say that other industries have benefited from the maturation of governance models and data management tools driven by the needs of the financial services industry (you are welcome!). Since the financial crisis, banks have invested billions of dollars in enterprise data management (EDM) initiatives, primarily driven by these regulatory demands. But how much is enough?

The answer, unfortunately, is that managing data and addressing data quality are a perpetual cost that takes resources (investment and people) away from initiatives that “change the bank.” This is a major reason many resent the cost of managing data, but there are other challenges too. The data rut (first published by Celent in August 2022) explains the challenges of mastering enterprise data. These are a mix of technology, process, people, and, just as importantly, cultural challenges. The data management office’s work is nearly impossible if business units don’t take ownership of data quality and follow through on remediating issues.

The Data Rut

Source: Celent

There is no quick fix, although when approached with a solid framework, data management does yield benefits and becomes less intrusive over time. For any bank, announcements of AI achievements will ring hollow if there isn’t a demonstrable commitment to data quality.

[1] EDM Council, 2023 Global Benchmark Report, March 2023