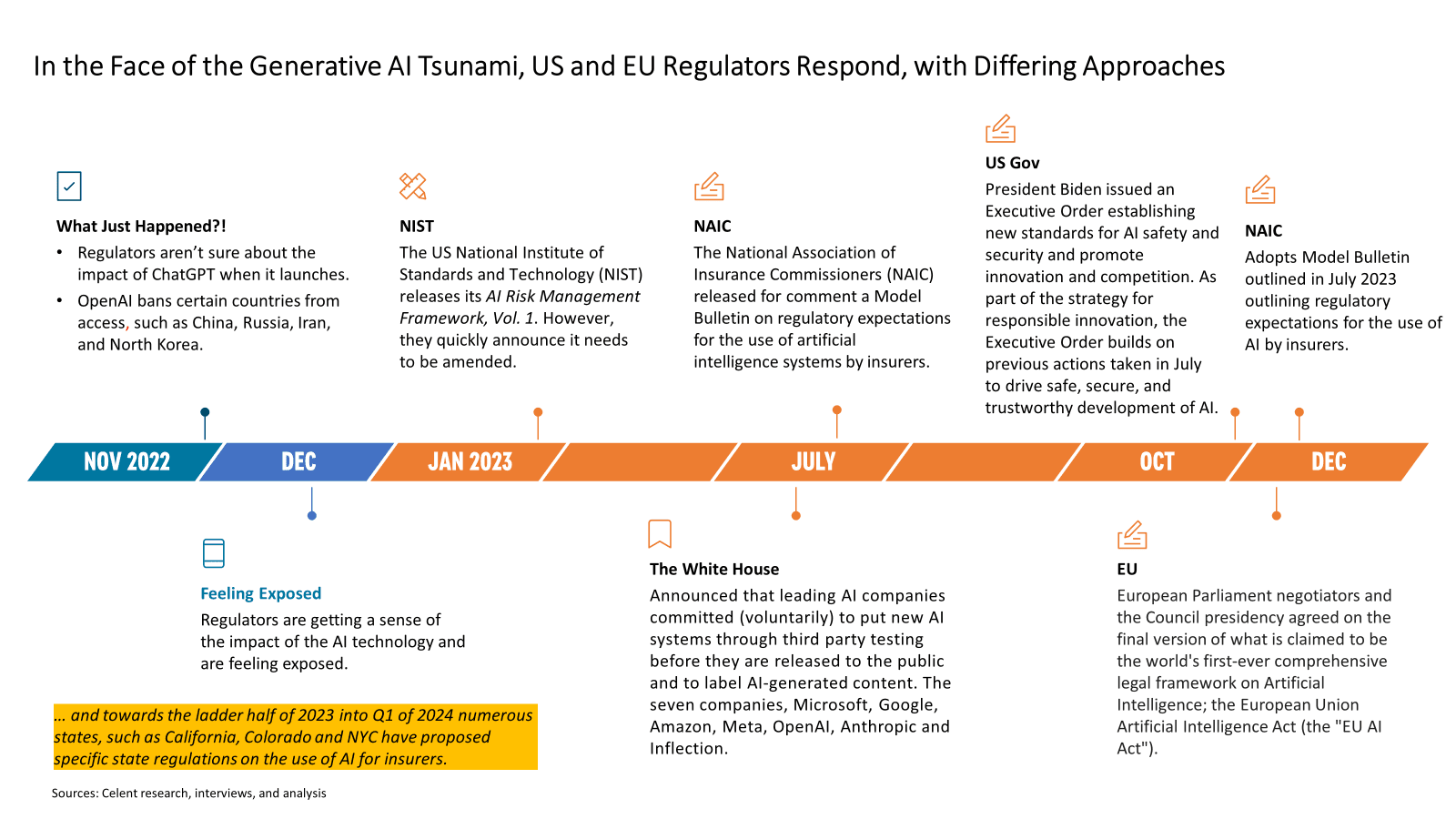

In July 2023, the White House announced that seven leading AI companies had committed to putting new AI systems through third-party testing before releasing them to the public and to labeling AI-generated content. The seven companies (Microsoft, Google, Amazon, Meta, OpenAI, Anthropic and Inflection) made several voluntary commitments in an agreement with the White House, with the goal of improving the safety and trustworthiness of the AI solutions they develop. Another objective of the meeting was to give Congress and the White House more runway to design regulations for a technology that has taken the world by storm.

On October 30, 2023, the Biden Administration issued an Executive Order establishing new standards for AI safety and security and promoting innovation and competition. As part of its strategy for responsible innovation, the Executive Order builds on previous actions the Administration has taken, including the July 2023 session that produced voluntary commitments from leading AI companies to drive the safe, secure and trustworthy development of AI.

Also in July 2023, the National Association of Insurance Commissioners (NAIC) released a draft Model Bulletin (Bulletin) for comment on regulatory expectations for the use of artificial intelligence systems (AI Systems) by insurers. The Bulletin was released through the NAIC’s Innovation, Cybersecurity and Technology Committee. It advises insurers of the information that insurance regulators may request during audits or investigations of the insurer’s AI Systems, including third-party AI Systems.

In December 2023, the NAIC adopted the Model Bulletin first released in July, which outlines regulatory expectations for the use of AI by insurers. The Bulletin stops short of a requirement but encourages insurers to implement and maintain a board-approved, written AI Systems Program. It recommends that the Program address governance, risk management controls, internal audit functions and third-party AI systems.

Also in December, European Parliament negotiators and the Council presidency reached a provisional agreement on what is claimed to be the world's first comprehensive legal framework on artificial intelligence: the European Union Artificial Intelligence Act (the "EU AI Act"). The EU AI Act represents a significant shift towards a regulated AI ecosystem. For the insurance industry, it offers a unique opportunity to lead in the ethical use of AI, enhancing customer trust and driving innovation within a secure and compliant framework.

Because the EU AI Act is the first law of its kind to regulate AI applications broadly, insurers will need to navigate a new compliance landscape while still leveraging AI for innovation and competitive advantage.

Global insurers will have to navigate these regulatory waters, and the sailing will be choppy. There are key differences between the EU AI Act and the approach set out in the Executive Order.

Key Differences:

1) Regulatory Approach: The U.S. favors a decentralized, sector-specific approach, while the EU is moving towards a centralized, comprehensive framework.

2) Legal Force: The U.S. leans towards voluntary guidelines and the adaptation of existing laws, whereas the EU proposes mandatory compliance requirements and severe financial penalties for non-compliance.

3) Risk Management: Both jurisdictions employ a risk-based approach, but the EU explicitly categorizes AI systems by risk level, with specific regulatory obligations attached to each level.

US Approach

- Decentralized and Sector-Specific: Regulation is highly distributed across various federal agencies, with a focus on adapting existing legal frameworks to AI.

- Risk-Based Approach: Emphasizes a risk management strategy tailored to the specific needs and challenges of different sectors.

- Voluntary Guidelines: Relies more on nonbinding guidance and frameworks, such as NIST's AI Risk Management Framework and the Blueprint for an AI Bill of Rights.

- State-Level Initiatives: Significant variation in AI regulation at the state level, with states like California, Connecticut, New York and Vermont introducing their own legislation.

EU Approach

- Centralized and Comprehensive: Proposes a unified regulatory framework for AI, highlighted by the AI Act, which aims to create a harmonized set of rules across member states.

- Risk Categorization: Classifies AI systems into four risk categories (Unacceptable, High, Limited, Minimal) with corresponding regulatory requirements.

- Mandatory Requirements: Introduces binding legal requirements for high-risk AI systems, focusing on transparency, accountability, and data governance.

- Cross-Border Impact: Regulations designed to apply uniformly across all EU member states, facilitating a single market for AI products and services.

Given that the EU AI Act is first out of the gate, it will likely influence how the Executive Order is implemented over time. As the US develops its guidelines, there will be a lot to learn from the EU’s pioneering first steps, and missteps. Regardless, insurance companies must conduct thorough impact assessments of their AI systems to ensure compliance with whichever regulatory regime applies to them. This includes revisiting AI strategies, data-handling practices and governance models to align them with the new regulations.